Two Moons

The following example is a binary classification domain adaptation problem. The goal is to learn the classification task on the target data (black points) knowing only the labels of the source data (red and blue points).

The following methods are compared: a source-only baseline, DANN, ADDA, DeepCORAL, CORAL, MCD, MDD, WDGRL, and CDAN.

[1]:

import os

import adapt

import numpy as np

import pandas as pd

import tensorflow as tf

import matplotlib.pyplot as plt

from sklearn.preprocessing import OneHotEncoder

from sklearn.decomposition import PCA

from sklearn.manifold import TSNE

from sklearn.metrics import accuracy_score

from sklearn.datasets import make_moons

from tensorflow.keras import Model, Sequential

from tensorflow.keras.optimizers import Adam, SGD, RMSprop, Adagrad

from tensorflow.keras.layers import Dense, Input, Dropout, Conv2D, MaxPooling2D, Flatten, Reshape, GaussianNoise, BatchNormalization

from tensorflow.keras.constraints import MinMaxNorm

from tensorflow.keras.regularizers import l2

from adapt.feature_based import DANN, ADDA, DeepCORAL, CORAL, MCD, MDD, WDGRL, CDAN

Setup

[2]:

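def make_moons_da(n_samples=100, rotation=30, noise=0.05, random_state=0):
    # Source domain: the standard two-moons dataset, shifted along the x-axis.
    Xs, ys = make_moons(n_samples=n_samples,
                        noise=noise,
                        random_state=random_state)
    Xs[:, 0] -= 0.5
    # Target domain: the source points rotated by `rotation` degrees.
    theta = np.radians(-rotation)
    cos_theta, sin_theta = np.cos(theta), np.sin(theta)
    rot_matrix = np.array(
        ((cos_theta, -sin_theta),
         (sin_theta, cos_theta))
    )
    Xt = Xs.dot(rot_matrix)
    # Target labels are identical to the source labels.
    yt = ys
    return Xs, ys, Xt, yt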
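As a quick sanity check on the rotation used to build the target domain, the sketch below rebuilds the same matrix on its own and confirms that it is orthogonal, preserves distances to the origin, and turns row vectors by 30 degrees counterclockwise. The helper name `rotation_matrix` and the random stand-in points are assumptions made for illustration only.

```python
import numpy as np

def rotation_matrix(degrees):
    """2-D rotation matrix built the same way as in make_moons_da."""
    theta = np.radians(-degrees)
    c, s = np.cos(theta), np.sin(theta)
    return np.array(((c, -s), (s, c)))

rng = np.random.default_rng(0)
points = rng.normal(size=(5, 2))   # stand-in for Xs
R = rotation_matrix(30)
rotated = points.dot(R)            # same operation as Xt = Xs.dot(rot_matrix)

# A rotation is orthogonal: R @ R.T is the identity and norms are preserved.
is_orthogonal = np.allclose(R @ R.T, np.eye(2))
norms_preserved = np.allclose(np.linalg.norm(points, axis=1),
                              np.linalg.norm(rotated, axis=1))

# With row vectors multiplied on the right, the unit x-vector lands at
# (cos 30°, sin 30°), i.e. a counterclockwise turn of 30 degrees.
unit_x_rotated = np.array([1.0, 0.0]).dot(R)
```

Because the transformation is a pure rotation, the two domains share the same geometry and differ only in orientation.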

[3]:

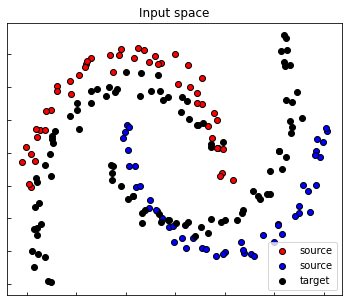

Xs, ys, Xt, yt = make_moons_da()

x_min, y_min = np.min([Xs.min(0), Xt.min(0)], 0)

x_max, y_max = np.max([Xs.max(0), Xt.max(0)], 0)

x_grid, y_grid = np.meshgrid(np.linspace(x_min-0.1, x_max+0.1, 100),

np.linspace(y_min-0.1, y_max+0.1, 100))

X_grid = np.stack([x_grid.ravel(), y_grid.ravel()], -1)

fig, ax1 = plt.subplots(1, 1, figsize=(6, 5))

ax1.set_title("Input space")

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend(loc="lower right")

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

plt.show()

Network

[4]:

def get_task(activation="sigmoid", units=1):
    # Small fully connected task network: two hidden layers of 10 ReLU units.
    model = Sequential()
    model.add(Flatten())
    model.add(Dense(10, activation="relu"))
    model.add(Dense(10, activation="relu"))
    model.add(Dense(units, activation=activation))
    return model

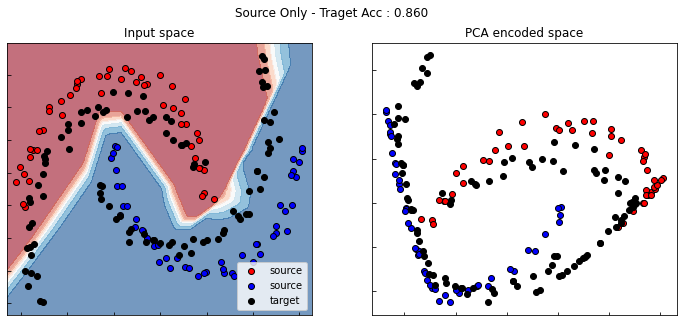

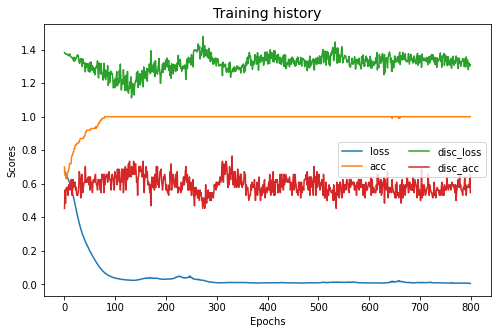

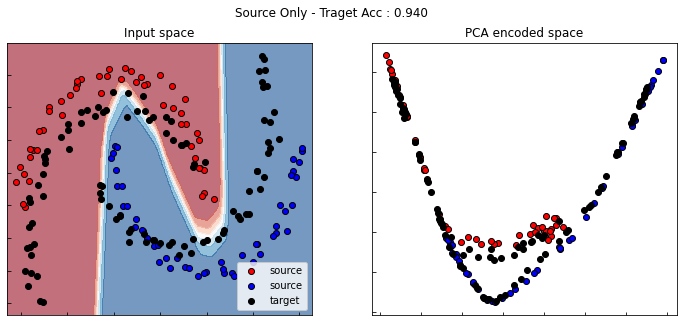

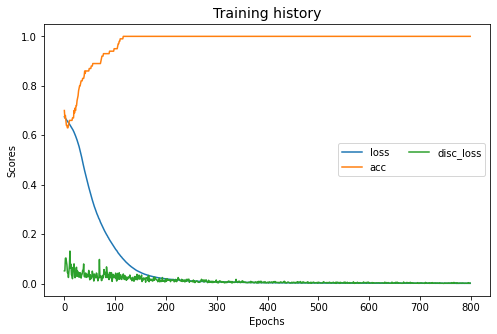

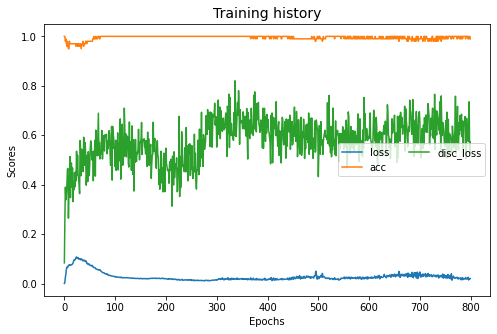

Source Only

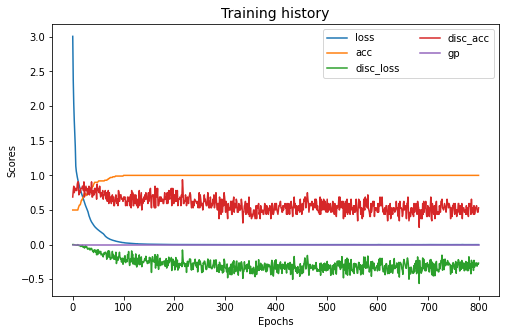

For source only, we use a DANN instance with lambda set to zero. Thus, the gradient of the discriminator is not back-propagated through the encoder.
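To illustrate why a zero lambda reduces DANN to a source-only model, here is a minimal sketch of how a gradient-reversal update combines the two signals reaching the encoder. The function `encoder_gradient` and the gradient values are hypothetical and are not taken from the ADAPT implementation.

```python
import numpy as np

def encoder_gradient(grad_task, grad_disc, lambda_):
    """Gradient applied to the encoder under gradient reversal.

    The discriminator gradient is flipped in sign and scaled by lambda_
    before being added to the task gradient.
    """
    return grad_task - lambda_ * grad_disc

grad_task = np.array([0.2, -0.1])   # made-up task-loss gradient
grad_disc = np.array([0.5, 0.3])    # made-up discriminator-loss gradient

# lambda_ = 0: the encoder only follows the task loss (source only).
g_src_only = encoder_gradient(grad_task, grad_disc, lambda_=0.0)

# lambda_ = 1: the reversed discriminator gradient is fully applied.
g_dann = encoder_gradient(grad_task, grad_disc, lambda_=1.0)
```

With `lambda_=0.0` the result equals the task gradient, so the discriminator is still trained but has no influence on the learned features.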

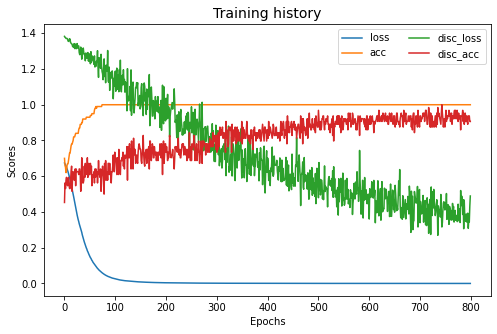

[5]:

src_only = DANN(task=get_task(), loss="bce", optimizer=Adam(0.001, beta_1=0.5),

copy=True, lambda_=0., metrics=["acc"], gamma=10., random_state=0)

[6]:

src_only.fit(Xs, ys, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(src_only.history_).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

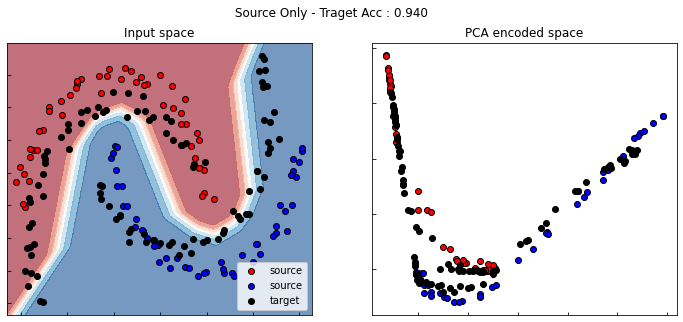

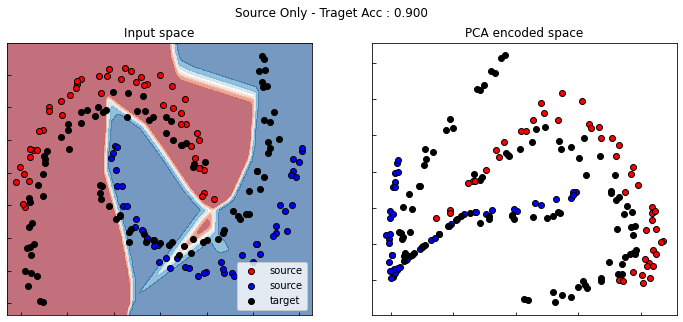

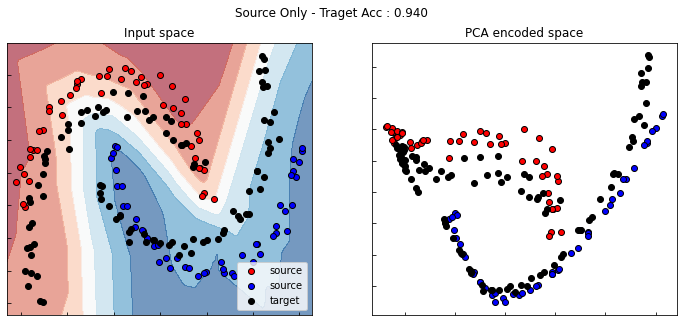

[7]:

yt_pred = src_only.predict(Xt)

acc = accuracy_score(yt, yt_pred>0.5)

yp_grid = src_only.predict(X_grid).reshape(100, 100)

X_pca = np.concatenate((src_only.transform(Xs),

src_only.transform(Xt)))

X_pca = PCA(2).fit_transform(X_pca)
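The accuracy computed above relies on thresholding the sigmoid output at 0.5. A minimal NumPy-only sketch of that step, assuming the model returns probabilities of shape (n, 1), could look as follows; `binary_accuracy` and the toy values are illustrative names and data, not part of the notebook.

```python
import numpy as np

def binary_accuracy(y_true, y_prob, threshold=0.5):
    """Fraction of samples whose thresholded probability matches the label."""
    y_pred = (np.ravel(y_prob) > threshold).astype(int)
    return float(np.mean(y_pred == np.ravel(y_true)))

y_true = np.array([0, 1, 1, 0])
y_prob = np.array([[0.1], [0.8], [0.4], [0.3]])   # toy sigmoid outputs

acc_example = binary_accuracy(y_true, y_prob)
```

Here three of the four toy predictions match their labels, so `acc_example` is 0.75.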

[8]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("PCA encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("Source Only - Target Acc : %.3f"%acc)

plt.show()

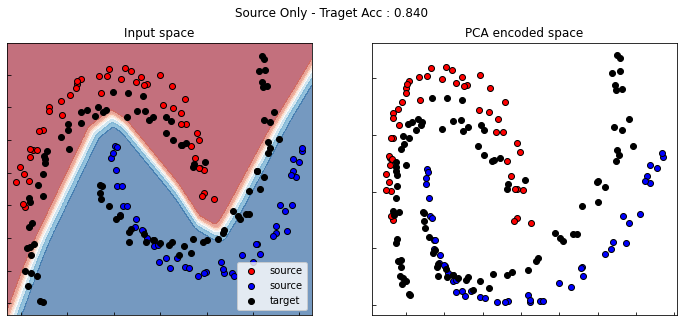

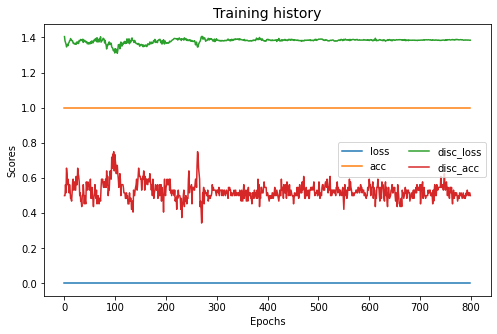

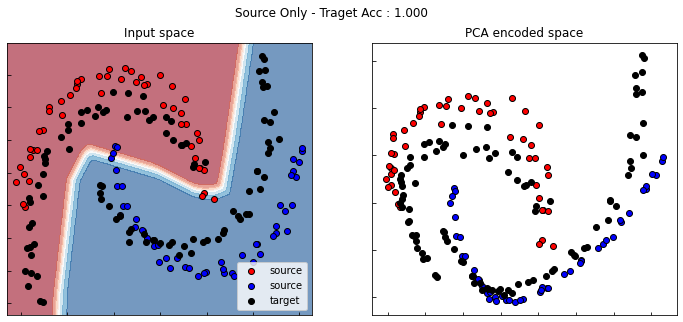

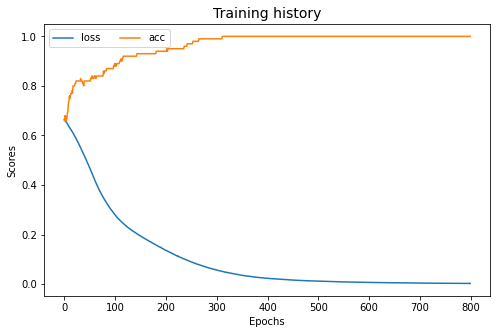

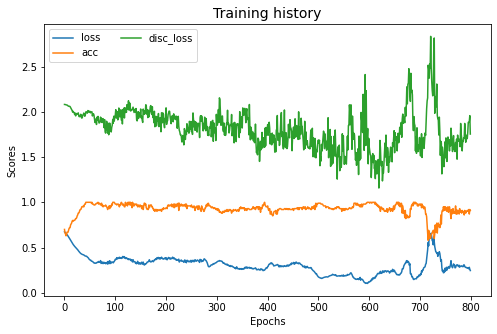

DANN

[9]:

dann = DANN(task=get_task(), loss="bce", optimizer=Adam(0.001, beta_1=0.5),

copy=True, lambda_=1., metrics=["acc"], gamma=10., random_state=0)

[10]:

dann.fit(Xs, ys, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(dann.history_).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

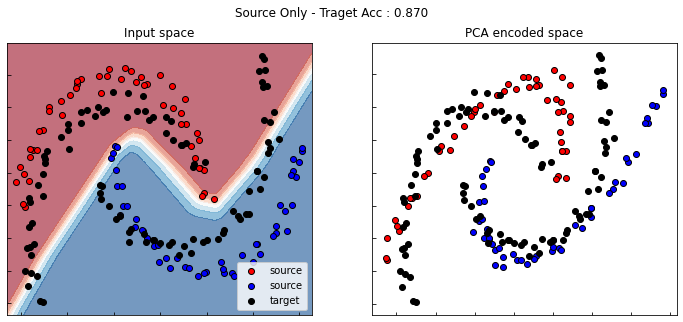

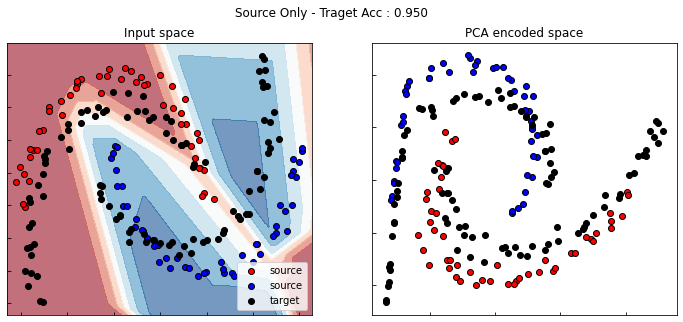

[11]:

yt_pred = dann.predict(Xt)

acc = accuracy_score(yt, yt_pred>0.5)

yp_grid = dann.predict(X_grid).reshape(100, 100)

X_pca = np.concatenate((dann.encoder_.predict(Xs),

dann.encoder_.predict(Xt)))

X_pca = PCA(2).fit_transform(X_pca)
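The projection of the encoded features onto two principal components can be sketched with a plain SVD. The example assumes 10-dimensional encoded features and uses random stand-ins for the encoder outputs; `pca_project` is a hypothetical helper, not the scikit-learn implementation used in the cell.

```python
import numpy as np

def pca_project(X, n_components=2):
    """Project X onto its leading principal components using an SVD."""
    Xc = X - X.mean(axis=0)                       # center the features
    _, _, Vt = np.linalg.svd(Xc, full_matrices=False)
    return Xc @ Vt[:n_components].T

rng = np.random.default_rng(0)
Zs = rng.normal(size=(50, 10))          # stand-in for encoded source data
Zt = rng.normal(size=(50, 10)) + 1.0    # stand-in for encoded target data

# Fit the projection on both domains together, then split them again,
# mirroring the concatenate-then-slice pattern used for plotting.
Z_2d = pca_project(np.concatenate((Zs, Zt)))
Zs_2d, Zt_2d = Z_2d[:len(Zs)], Z_2d[len(Zs):]
```

Fitting on the concatenated data keeps source and target in the same 2-D coordinate system, which is what makes the two point clouds comparable in the plot.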

[12]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("PCA encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("DANN - Target Acc : %.3f"%acc)

plt.show()

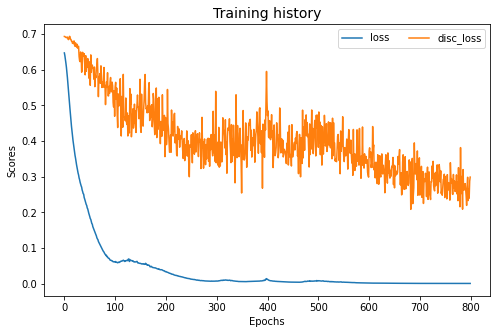

ADDA

[13]:

adda = ADDA(task=get_task(),

loss="bce", optimizer=Adam(0.001, beta_1=0.5),

copy=True, metrics=["acc"], random_state=0)

[14]:

adda.fit(Xs, ys, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(adda.history_).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

[15]:

yt_pred = adda.predict(Xt)

acc = accuracy_score(yt, yt_pred>0.5)

yp_grid = adda.predict(X_grid).reshape(100, 100)

X_pca = np.concatenate((adda.encoder_.predict(Xs),

adda.encoder_.predict(Xt)))

X_pca = PCA(2).fit_transform(X_pca)

[16]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("PCA encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("ADDA - Target Acc : %.3f"%acc)

plt.show()

DeepCORAL

[17]:

dcoral = DeepCORAL(task=get_task(), lambda_=1000.,

loss="bce", optimizer=Adam(0.001, beta_1=0.5),

copy=True, metrics=["acc"], random_state=0)

[18]:

dcoral.fit(Xs, ys, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(dcoral.history_).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

[19]:

yt_pred = dcoral.predict(Xt)

acc = accuracy_score(yt, yt_pred>0.5)

yp_grid = dcoral.predict(X_grid).reshape(100, 100)

X_pca = np.concatenate((dcoral.encoder_.predict(Xs),

dcoral.encoder_.predict(Xt)))

X_pca = PCA(2).fit_transform(X_pca)

[20]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("PCA encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("DeepCORAL - Target Acc : %.3f"%acc)

plt.show()

CORAL

[21]:

coral = CORAL(estimator=get_task(), lambda_=0.,

loss="bce", optimizer=Adam(0.001, beta_1=0.5),

copy=True, metrics=["acc"], random_state=0)

[22]:

coral.fit(Xs, ys, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(coral.estimator_.history.history).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

Fit transform...

Previous covariance difference: 0.170768

New covariance difference: 0.000000

Fit Estimator...
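The log above reports the covariance difference dropping to zero after the transform. A minimal NumPy sketch of the general CORAL idea (whiten the source covariance, then re-color it with the target covariance) reproduces that behavior on toy data. This is an illustration under stated assumptions, not ADAPT's code: `sym_matrix_power`, `coral_align`, the `reg` parameter, and the demo arrays are all hypothetical.

```python
import numpy as np

def sym_matrix_power(C, power):
    """Power of a symmetric positive-definite matrix via eigendecomposition."""
    vals, vecs = np.linalg.eigh(C)
    return (vecs * vals ** power) @ vecs.T

def coral_align(Xs, Xt, reg=0.0):
    """Whiten the source covariance, then re-color it with the target's."""
    d = Xs.shape[1]
    Cs = np.cov(Xs, rowvar=False) + reg * np.eye(d)
    Ct = np.cov(Xt, rowvar=False) + reg * np.eye(d)
    return Xs @ sym_matrix_power(Cs, -0.5) @ sym_matrix_power(Ct, 0.5)

rng = np.random.default_rng(0)
Xs_demo = rng.normal(size=(200, 2)) @ np.array([[2.0, 0.3], [0.0, 0.5]])
Xt_demo = rng.normal(size=(200, 2)) @ np.array([[0.7, -0.4], [0.6, 1.5]])

Xs_aligned = coral_align(Xs_demo, Xt_demo)

# Mean absolute gap between source and target covariance, before and after.
Ct_demo = np.cov(Xt_demo, rowvar=False)
cov_gap_before = np.abs(np.cov(Xs_demo, rowvar=False) - Ct_demo).mean()
cov_gap_after = np.abs(np.cov(Xs_aligned, rowvar=False) - Ct_demo).mean()
```

With no regularization the aligned source covariance matches the target covariance up to floating-point error, which is consistent with a reported difference of 0.000000.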

[23]:

yt_pred = coral.predict(Xt)

acc = accuracy_score(yt, yt_pred>0.5)

yp_grid = coral.predict(X_grid).reshape(100, 100)

X_pca = np.concatenate((coral.transform(Xs, domain="src"),

coral.transform(Xt, domain="tgt")))

# X_pca = PCA(2).fit_transform(X_pca)

[24]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("Encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("CORAL - Target Acc : %.3f"%acc)

plt.show()

MCD

[25]:

mcd = MCD(task=get_task(),

loss="bce", optimizer=Adam(0.001, beta_1=0.5),

copy=True, metrics=["acc"], random_state=0)

[26]:

mcd.fit(Xs, ys, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(mcd.history_).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

[27]:

yt_pred = mcd.predict(Xt)

acc = accuracy_score(yt, yt_pred>0.5)

yp_grid = mcd.predict(X_grid).reshape(100, 100)

X_pca = np.concatenate((mcd.encoder_.predict(Xs),

mcd.encoder_.predict(Xt)))

X_pca = PCA(2).fit_transform(X_pca)

[28]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("PCA encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("MCD - Target Acc : %.3f"%acc)

plt.show()

MDD

[29]:

mdd = MDD(task=get_task(),

loss="bce", optimizer=Adam(0.001, beta_1=0.5),

copy=True, metrics=["acc"], random_state=0)

[30]:

mdd.fit(Xs, ys, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(mdd.history_).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

[31]:

yt_pred = mdd.predict(Xt)

acc = accuracy_score(yt, yt_pred>0.5)

yp_grid = mdd.predict(X_grid).reshape(100, 100)

X_pca = np.concatenate((mdd.encoder_.predict(Xs),

mdd.encoder_.predict(Xt)))

X_pca = PCA(2).fit_transform(X_pca)

[32]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("PCA encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("MDD - Target Acc : %.3f"%acc)

plt.show()

WDGRL

[33]:

wdgrl = WDGRL(task=get_task(None), gamma=0.,

loss="bce", optimizer=Adam(0.001, beta_1=0.5),

copy=True, metrics=["acc"], random_state=0)

[34]:

wdgrl.fit(Xs, ys, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(wdgrl.history_).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

[35]:

yt_pred = wdgrl.predict(Xt)

acc = accuracy_score(yt, yt_pred>0.5)

yp_grid = wdgrl.predict(X_grid).reshape(100, 100)

X_pca = np.concatenate((wdgrl.encoder_.predict(Xs),

wdgrl.encoder_.predict(Xt)))

X_pca = PCA(2).fit_transform(X_pca)

[36]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("PCA encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("WDGRL - Target Acc : %.3f"%acc)

plt.show()

CDAN

[37]:

yss = np.zeros((len(ys), 2))

yss[ys==0, 0] = 1

yss[ys==1, 1] = 1
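The one-hot encoding above can also be written as a single indexing operation on an identity matrix. The short sketch below uses a toy label vector (`ys_demo`, an illustrative stand-in for `ys`) to show that both approaches give the same array.

```python
import numpy as np

ys_demo = np.array([0, 1, 1, 0])   # stand-in for the binary labels ys

# Explicit assignment, as in the cell above.
yss_loop = np.zeros((len(ys_demo), 2))
yss_loop[ys_demo == 0, 0] = 1
yss_loop[ys_demo == 1, 1] = 1

# Equivalent: row i of the identity matrix is the one-hot code of class i.
yss_eye = np.eye(2)[ys_demo]

# argmax along axis 1 recovers the original integer labels.
recovered = yss_eye.argmax(1)
```

The `argmax(1)` call is the inverse step applied later to the softmax predictions of the CDAN model.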

[38]:

cdan = CDAN(task=get_task(units=2, activation="softmax"), entropy=True,

loss="categorical_crossentropy", optimizer=Adam(0.001, beta_1=0.5),

copy=True, random_state=0)

[39]:

cdan.fit(Xs, yss, Xt, yt, epochs=800, batch_size=34, verbose=0);

pd.DataFrame(cdan.history_).plot(figsize=(8, 5))

plt.title("Training history", fontsize=14); plt.xlabel("Epochs"); plt.ylabel("Scores")

plt.legend(ncol=2)

plt.show()

[40]:

yt_pred = cdan.predict(Xt).argmax(1)

acc = accuracy_score(yt, yt_pred)

yp_grid = cdan.predict(X_grid)[:, 1].reshape(100, 100)

X_pca = np.concatenate((cdan.encoder_.predict(Xs),

cdan.encoder_.predict(Xt)))

X_pca = PCA(2).fit_transform(X_pca)

[41]:

cm = plt.cm.RdBu

fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(12, 5))

ax1.set_title("Input space")

ax1.contourf(x_grid, y_grid, yp_grid, cmap=cm, alpha=0.6)

ax1.scatter(Xs[ys==0, 0], Xs[ys==0, 1], label="source", edgecolors='k', c="red")

ax1.scatter(Xs[ys==1, 0], Xs[ys==1, 1], label="source", edgecolors='k', c="blue")

ax1.scatter(Xt[:, 0], Xt[:, 1], label="target", edgecolors='k', c="black")

ax1.legend()

ax1.set_yticklabels([])

ax1.set_xticklabels([])

ax1.tick_params(direction ='in')

ax2.set_title("PCA encoded space")

ax2.scatter(X_pca[:len(Xs), 0][ys==0], X_pca[:len(Xs), 1][ys==0],

label="source", edgecolors='k', c="red")

ax2.scatter(X_pca[:len(Xs), 0][ys==1], X_pca[:len(Xs), 1][ys==1],

label="source", edgecolors='k', c="blue")

ax2.scatter(X_pca[len(Xs):, 0], X_pca[len(Xs):, 1],

label="target", edgecolors='k', c="black")

ax2.set_yticklabels([])

ax2.set_xticklabels([])

ax2.tick_params(direction ='in')

fig.suptitle("CDAN - Target Acc : %.3f"%acc)

plt.show()